NFS Through a Separate VMkernel Adapter

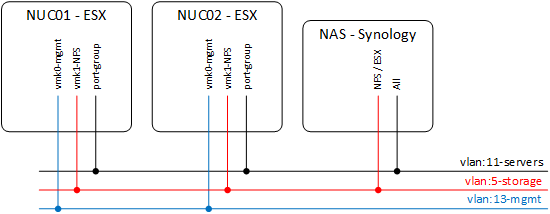

In this post I will briefly describe how I connected my storage to my ESX environment. I have multiple subnets, namely management and servers. Since my NAS, a Synology, also runs multiple packages, I decided to place it in the server subnet. In the initial setup of my ESX servers I placed them in the management subnet (vmkernel port). This means that traffic from the management subnet to the server subnet has to be routed. The routing is done at a firewall, which also introduces a bit more latency.

Latency is not a real problem in this environment, but in my opinion the storage traffic between the NAS and the ESX hosts doesn’t have to be firewalled, and the less latency the better! There are two ways I could go with this: I could create a separate vmkernel adapter in the server subnet, which makes sure all the traffic is handled on layer 2 with no routing involved, or, even better (imo), create a completely separate network for storage traffic. It is good to know that vSphere will look for the closest matching subnet for handling the NFS traffic. If you want to know how NFS traffic is handled by ESX, I suggest reading the posts on Chris Wahl’s blog; he did a perfect job of explaining this.
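To make the "closest matching subnet" idea a bit more concrete, here is a minimal Python sketch of that selection logic. It is a simplified model for illustration only, not how ESX implements it internally. The function name `pick_vmkernel`, the interface names, the management address 192.168.1.22 and the server-subnet address 192.168.11.20 are all assumptions I made up for the example; only 192.168.5.20 and 192.168.5.22 come from my setup.

```python
import ipaddress


def pick_vmkernel(vmk_interfaces, target_ip):
    """Return the name of the vmkernel interface whose subnet contains target_ip.

    Returns None when no interface is in the same subnet, which in this
    simplified model means the traffic would be routed via the gateway.
    """
    target = ipaddress.ip_address(target_ip)
    matches = []
    for name, cidr in vmk_interfaces.items():
        network = ipaddress.ip_interface(cidr).network
        if target in network:
            matches.append((network.prefixlen, name))
    if not matches:
        return None
    # Prefer the most specific (longest prefix) matching subnet.
    return max(matches)[1]


# Hypothetical host: vmk0 address is invented, vmk1 is the storage adapter.
vmks = {
    "vmk0": "192.168.1.22/24",  # management subnet (assumed address)
    "vmk1": "192.168.5.22/24",  # storage subnet on vlan 5
}

print(pick_vmkernel(vmks, "192.168.5.20"))   # NAS on the storage subnet
print(pick_vmkernel(vmks, "192.168.11.20"))  # assumed NAS address on the server subnet
```

With the storage adapter in place, the NAS address on 192.168.5.0/24 resolves to `vmk1`; an address in a subnet without a local adapter returns `None`, which corresponds to the routed (firewalled) path I wanted to avoid.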

Mikrotik Switch configuration

First of all I created a new vlan (vlan-id 5) and decided which subnet I wanted to use (192.168.5.0/24). I started by creating this vlan on my Mikrotik. More about how vlan traffic is handled by Mikrotik can be found in an earlier post. First, create the vlan:

[admin@Mikrotik] > interface ethernet switch vlan

[admin@Mikrotik] /interface ethernet switch vlan> add vlan-id=5 ports=TRK-NAS,ETH09-NUC01,ETH10-NUC02

Next, make sure the ports handle the vlan traffic correctly:

[admin@Mikrotik] > interface ethernet switch egress-vlan-tag

[admin@Mikrotik] /interface ethernet switch egress-vlan-tag> add vlan-id=5 tagged-ports=TRK-NAS,ETH09-NUC01,ETH10-NUC02

Synology extra interface configuration

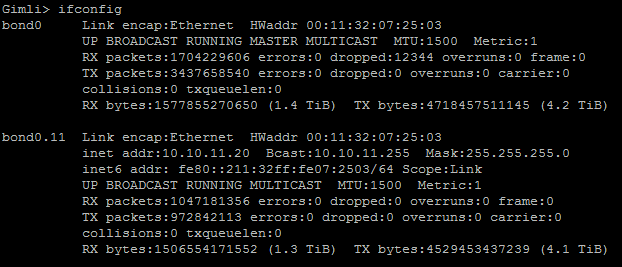

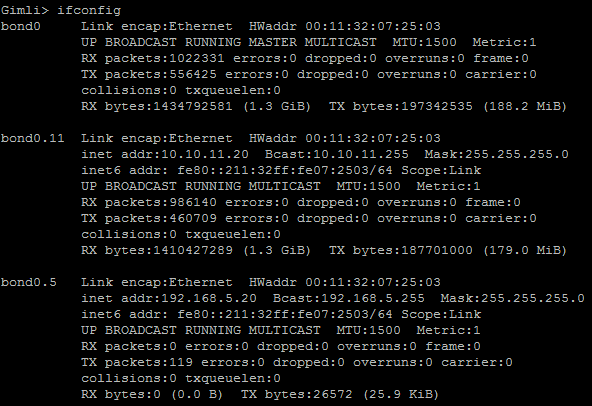

The next part is creating a second interface on the Synology NAS. Log in to the NAS with SSH and run ifconfig.

As you can see in my example, I have a bonding interface (already tagged) on vlan 11. We can easily add an extra vlan by running the following command; the number after the dot is the vlan-id.

vi /etc/sysconfig/network-scripts/ifcfg-bond0.5

In the text editor I entered the following parameters. (In vi, press i or the Insert key before you start typing.)

DEVICE=bond0.5

VLAN_ROW_DEVICE=bond0

VLAN_ID=5

ONBOOT=yes

BOOTPROTO=static

IPADDR=192.168.5.20

NETMASK=255.255.255.0

When you’re done, save the file and quit vi (press Esc and type “:wq”). The safest way to test the configuration is by rebooting the NAS (a network restart should also be sufficient). After the reboot, run ifconfig again.

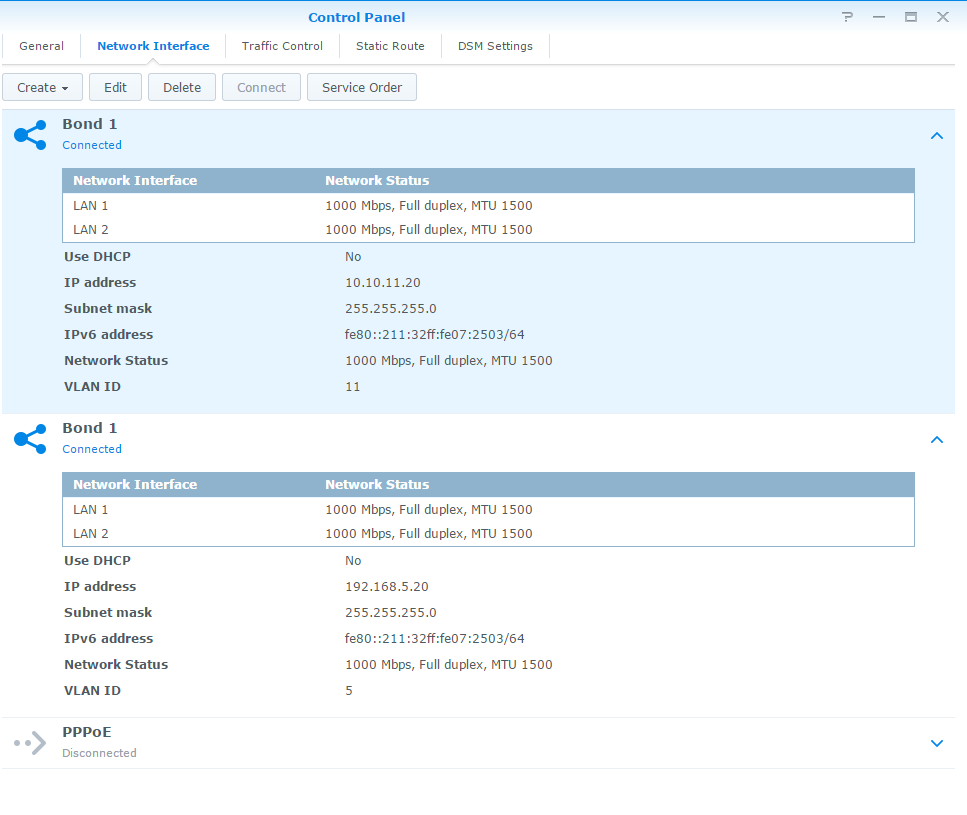

You can also check the configuration from the DSM web-interface.
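A typo in the ifcfg file is easy to make and annoying to debug after a reboot, so it can help to sanity-check the values first. The Python sketch below parses the same KEY=VALUE lines as my ifcfg-bond0.5 file and checks that the device name, vlan-id and IP address agree with each other. The helper names `parse_ifcfg` and `check_vlan_config` are my own invention for this example; this is not a Synology tool.

```python
import ipaddress

# Contents of ifcfg-bond0.5 as entered above.
IFCFG = """\
DEVICE=bond0.5
VLAN_ROW_DEVICE=bond0
VLAN_ID=5
ONBOOT=yes
BOOTPROTO=static
IPADDR=192.168.5.20
NETMASK=255.255.255.0
"""


def parse_ifcfg(text):
    """Parse KEY=VALUE lines into a dict, skipping blank lines and comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def check_vlan_config(settings, expected_network):
    """Return a list of problems; an empty list means the values are consistent."""
    problems = []
    parent, _, suffix = settings["DEVICE"].partition(".")
    if suffix != settings["VLAN_ID"]:
        problems.append("DEVICE suffix does not match VLAN_ID")
    if parent != settings["VLAN_ROW_DEVICE"]:
        problems.append("DEVICE parent does not match VLAN_ROW_DEVICE")
    iface = ipaddress.ip_interface(f"{settings['IPADDR']}/{settings['NETMASK']}")
    if iface.network != ipaddress.ip_network(expected_network):
        problems.append(f"{iface} is not in {expected_network}")
    return problems


cfg = parse_ifcfg(IFCFG)
print(check_vlan_config(cfg, "192.168.5.0/24"))
```

An empty list means the file is internally consistent and the address sits in the storage subnet I picked for vlan 5.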

ESX configuration

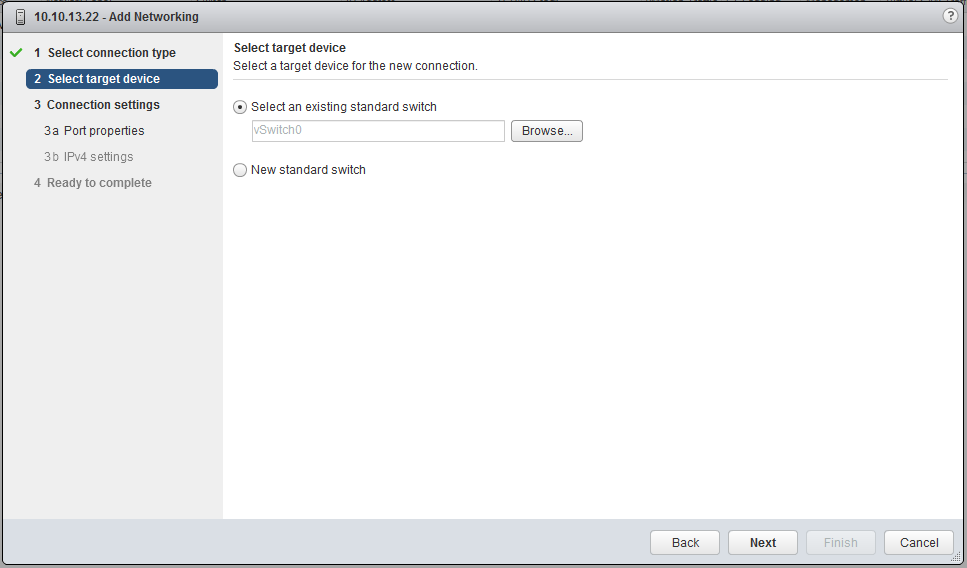

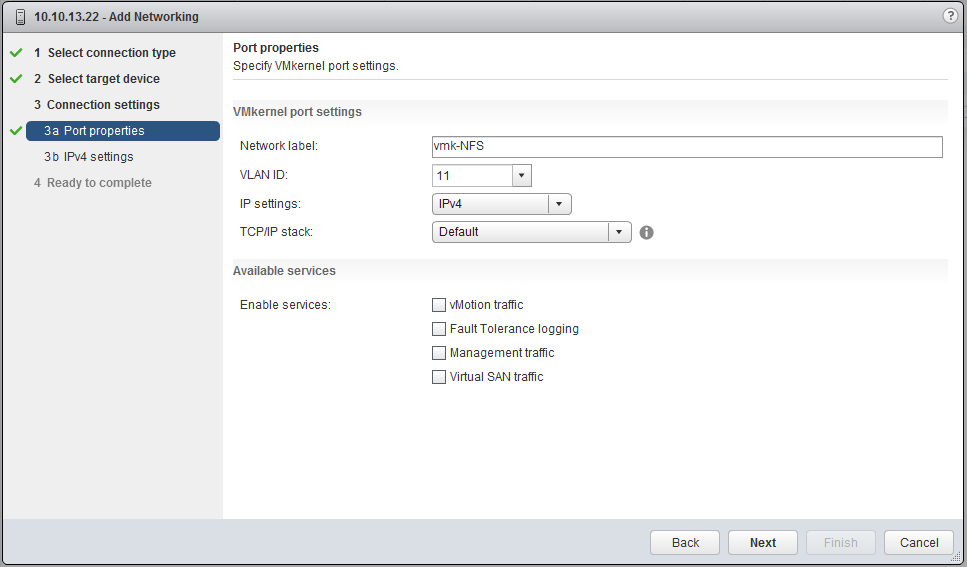

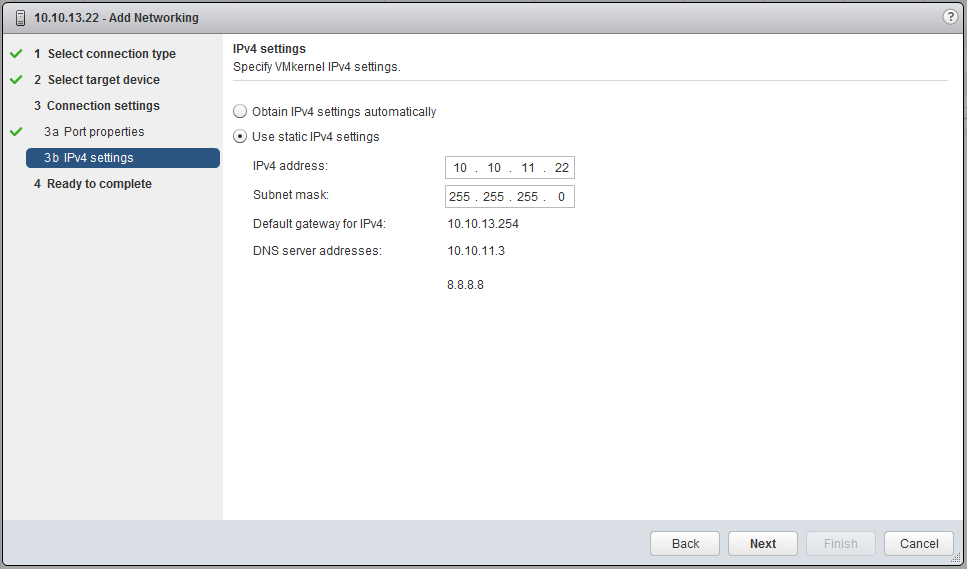

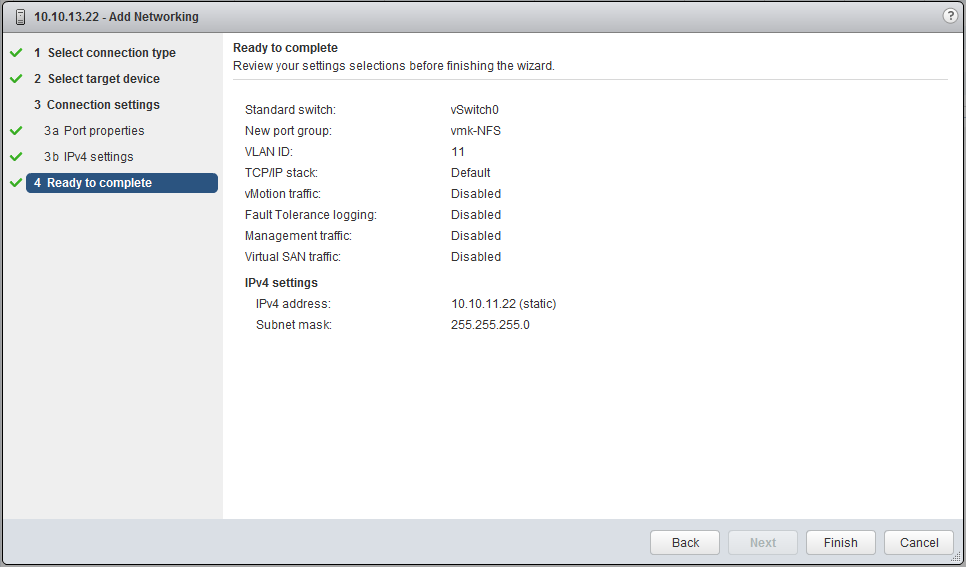

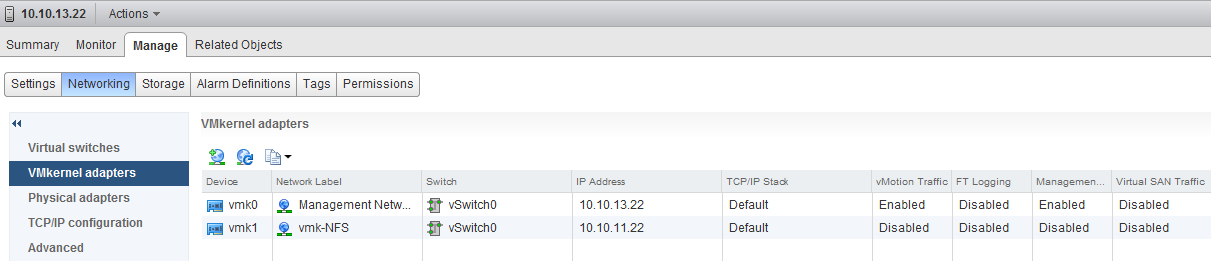

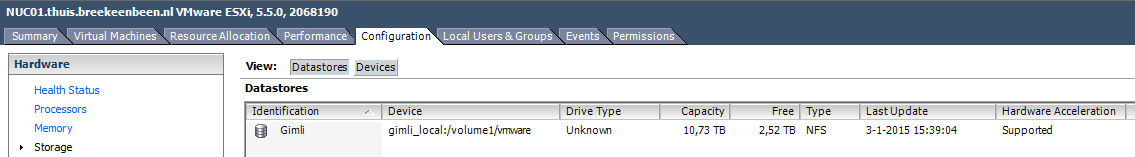

The last part is the configuration of ESX. The sad part is that the easiest way to “re-add” the datastore is to remove all your VMs from your inventory and re-add them after making the configuration changes. First of all, create a new vmkernel adapter, which will handle all the NFS traffic. Note that the VLAN ID in the images should be 5 and the IP should be 192.168.5.22; I changed this later.

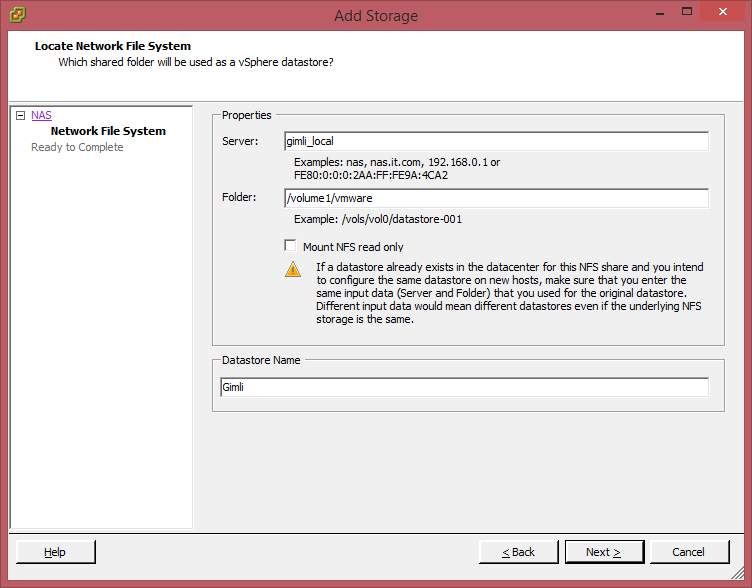

When you’re done, empty your inventory and remove the datastore. I decided to add the datastore with a DNS name in case the IP address needs to change again, so I don’t have to do the “remove & add-to” inventory trick. If you don’t have a manageable DNS server, you can also edit the /etc/hosts file of the ESX host.

Now add the datastore (preferably by name), add the VMs back to your inventory and start them up (don’t forget to answer the question about moved or copied). To verify that the storage traffic really goes through the new subnet, you can run netstat on the NAS to check the connections. As you can see, both hosts are using their new vmkernel interfaces to connect to the NAS.

Gimli> netstat -nat | grep 192.168

tcp 0 0 192.168.5.20:2049 192.168.5.22:876 ESTABLISHED

tcp 0 0 192.168.5.20:2049 192.168.5.21:717 ESTABLISHED
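With two hosts this check is easy to do by eye, but the same verification can be scripted. The Python sketch below takes the netstat lines shown above, collects the clients connected to the NFS port (2049) and reports any that are not in the storage subnet. The function names `nfs_clients` and `outside_subnet` are hypothetical helpers for this example, and the parsing assumes the six-column layout of the output above.

```python
import ipaddress

# The netstat output from the NAS, as shown above.
NETSTAT = """\
tcp 0 0 192.168.5.20:2049 192.168.5.22:876 ESTABLISHED
tcp 0 0 192.168.5.20:2049 192.168.5.21:717 ESTABLISHED
"""


def nfs_clients(netstat_output, nfs_port=2049):
    """Return remote IPs with an ESTABLISHED TCP connection to the local NFS port."""
    clients = []
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: proto, recv-q, send-q, local, remote, state.
        if len(fields) < 6 or fields[0] != "tcp" or fields[5] != "ESTABLISHED":
            continue
        _, _, local_port = fields[3].rpartition(":")
        remote_ip, _, _ = fields[4].rpartition(":")
        if local_port == str(nfs_port):
            clients.append(remote_ip)
    return clients


def outside_subnet(ips, network):
    """Return the IPs that do not belong to the given network."""
    net = ipaddress.ip_network(network)
    return [ip for ip in ips if ipaddress.ip_address(ip) not in net]


clients = nfs_clients(NETSTAT)
print(clients)
print(outside_subnet(clients, "192.168.5.0/24"))
```

If the second list is empty, every NFS client is connecting from the storage subnet, which is exactly what the netstat output above shows for both hosts.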

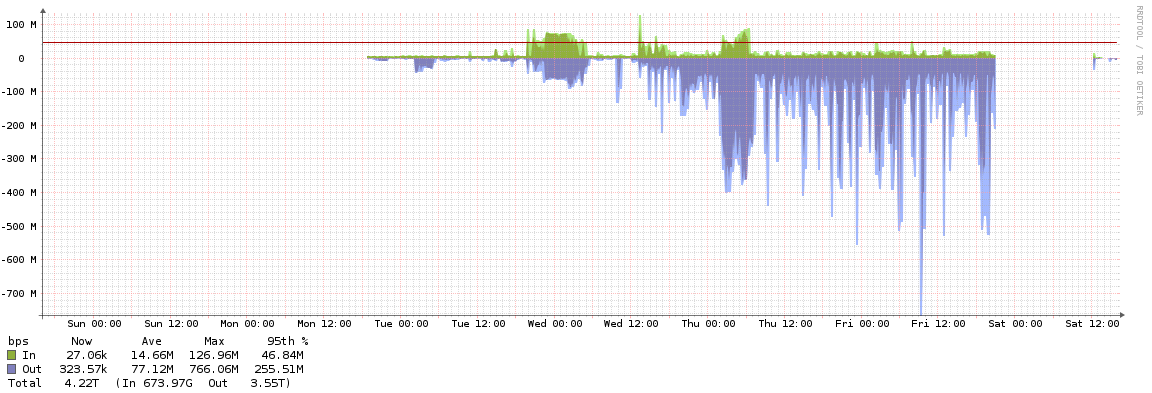

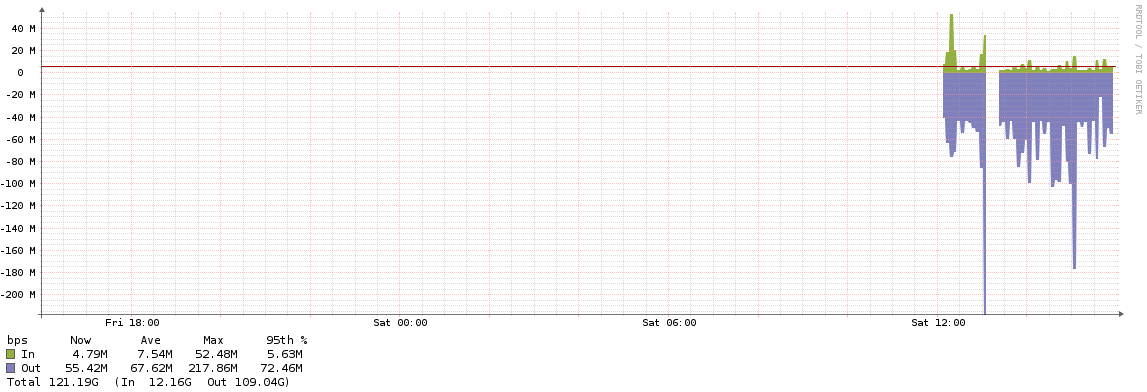

I can also see it in my network monitoring tool: the network traffic on the “server-subnet”, bond0.11, decreases, while the network traffic on bond0.5, the storage subnet, starts to grow.